Optimizing Chunk Size in tsfresh for Enhanced Processing Speed

Last Updated :

11 Jul, 2024

tsfresh is a powerful Python library for extracting meaningful features from time series data. However, when dealing with large datasets, the feature extraction process can become computationally intensive. One key strategy to enhance tsfresh’s processing speed is by adjusting the chunksize parameter. In this article, we’ll explore how chunk size works, the factors influencing its optimal value, and practical steps to determine the best setting for your specific use case.

Understanding Chunk Size in tsfresh

The chunk size in tsfresh determines the number of tasks submitted to worker processes for parallelization. This parameter is crucial for optimizing the performance of feature extraction and selection. By default, tsfresh uses parallelization to distribute tasks across multiple cores, which can significantly speed up processing time. However, the chunk size must be carefully set to avoid over-provisioning and ensure efficient use of available resources.

The goal is to find the sweet spot where the overhead of managing chunks is minimized, while maximizing the benefits of parallel execution.

Why is Chunk Size Important?

Processing data can be speed up considerably by choosing the appropriate chunk size. If the pieces are too big it could be like attempting to carry too many novels at once on your computer. It could take an eternity to complete if the pieces are too little, similar to carrying one book at a time book at a time.

Factors Influencing Optimal Chunk Size

- Dataset Size: Larger datasets often benefit from larger chunk sizes, as it allows for better utilization of parallel processing resources.

- Hardware Resources: The number of CPU cores and available memory on your machine significantly impacts the optimal chunk size. More cores can handle larger chunks, while memory constraints may necessitate smaller chunks.

- Feature Calculation Complexity: If you’re extracting complex features that require substantial computation, smaller chunk sizes might be preferable to prevent individual chunks from becoming too demanding.

- Number of Time Series: If you have a large number of individual time series within your dataset, smaller chunk sizes can help distribute the workload more evenly across worker processes.

Setting Chunk Size in tsfresh : Step-by-Step Guide

Let’s install the tsfresh library. You can do this using pip (a tool for installing Python packages).

pip install tsfresh

Chunk Size Parameters

Parameter

| Description

|

|---|

column_id

| Identifier for each data series

|

|---|

column_sort

| Time column to sort the data

|

|---|

max_timeshift

| Size of each chunk

|

|---|

Let’s create an example code with step-by-step implementation to demonstrate how to set chunk size in tsfresh and visualize the process. We’ll use a simple dataset, such as daily temperature readings, and walk through each step with detailed explanations and visualizations.

Step 1: Install and Import Necessary Libraries

First, we’ll install tsfresh and import the necessary libraries.

Python

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from tsfresh import extract_features

from tsfresh.utilities.dataframe_functions import roll_time_series

- tsfresh: The main library we’ll use to extract features from time series data.

- pandas: For data manipulation and analysis.

- numpy: For numerical operations.

- matplotlib: For visualizing the data.

Step 2: Create a Sample Dataset

We’ll create a sample dataset of daily temperature readings for a month.

Python

# Generate a date range for one month

date_range = pd.date_range(start='2023-01-01', end='2023-01-31', freq='D')

# Generate random temperature data

temperature_data = np.random.uniform(low=20, high=30, size=len(date_range))

# Create a DataFrame

data = pd.DataFrame({'date': date_range, 'temperature': temperature_data})

# Display the first few rows of the dataset

print(data.head())

Output:

date temperature

0 2023-01-01 28.779975

1 2023-01-02 22.506181

2 2023-01-03 25.578607

3 2023-01-04 22.967865

4 2023-01-05 29.877910

This step creates a dataset with dates and corresponding random temperature readings.

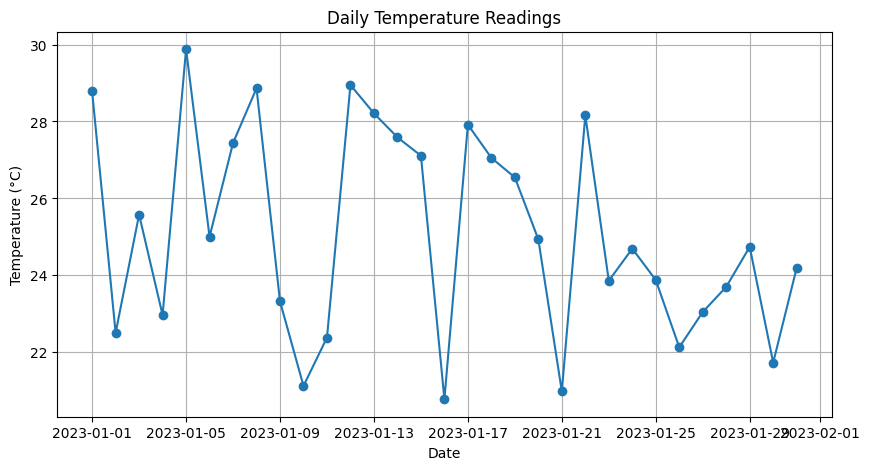

Step 3: Visualize the Dataset

We’ll plot the temperature data to understand its structure.

Python

# Plot the temperature data

plt.figure(figsize=(10, 5))

plt.plot(data['date'], data['temperature'], marker='o')

plt.title('Daily Temperature Readings')

plt.xlabel('Date')

plt.ylabel('Temperature (°C)')

plt.grid(True)

plt.show()

Output:

Temperature data

This visualization helps us see the fluctuations in temperature over the month.

Step 4: Add a Column ID and Time Column

For tsfresh to process the data, we need to add an ID and a time column.

Python

# Add an ID column (all data belongs to the same series)

data['id'] = 1

# Rename the date column to 'time' for tsfresh compatibility

data.rename(columns={'date': 'time'}, inplace=True)

# Display the updated DataFrame

print(data.head())

Output:

time temperature id

0 2023-01-01 28.779975 1

1 2023-01-02 22.506181 1

2 2023-01-03 25.578607 1

3 2023-01-04 22.967865 1

4 2023-01-05 29.877910 1

- id: An identifier for the data series.

- time: The time column required by tsfresh.

Step 5: Set Chunk Size Using roll_time_series

We’ll break the data into chunks using the roll_time_series function, with syntax:

chunked_data = roll_time_series(data, column_id='id', column_sort='time', max_timeshift=chunk_size)

- roll_time_series: This function breaks the data into chunks.

- column_id: Identifies the data series (all data has id=1).

- column_sort: The time column to sort the data (time).

- max_timeshift: The chunk size (7 days in this example).

Python

# Define the chunk size (e.g., 7 days)

chunk_size = 7

# Use roll_time_series to create chunks

chunked_data = roll_time_series(data, column_id='id', column_sort='time', max_timeshift=chunk_size)

# Display the first few rows of the chunked data

print(chunked_data.head())

Output:

Rolling: 100%|██████████| 31/31 [00:00<00:00, 496.67it/s] time temperature id

0 2023-01-01 28.779975 (1, 2023-01-01 00:00:00)

1 2023-01-01 28.779975 (1, 2023-01-02 00:00:00)

2 2023-01-02 22.506181 (1, 2023-01-02 00:00:00)

3 2023-01-01 28.779975 (1, 2023-01-03 00:00:00)

4 2023-01-02 22.506181 (1, 2023-01-03 00:00:00)

- max_timeshift: The size of each chunk (7 days in this example).

Now, we’ll extract features from the chunked data using tsfresh.

features = extract_features(chunked_data, column_id='id', column_sort='time')

- extract_features: This function extracts features from the chunked data.

- column_id: Identifies the data series.

- column_sort: The time column.

Python

# Extract features from the chunked data

features = extract_features(chunked_data, column_id='id', column_sort='time')

# Display the extracted features

print(features.head())

Output:

WARNING:tsfresh.feature_extraction.settings:Dependency not available for matrix_profile, this feature will be disabled!

Feature Extraction: 100%|██████████| 31/31 [00:01<00:00, 18.76it/s] temperature__variance_larger_than_standard_deviation \

1 2023-01-01 0.0

2023-01-02 1.0

2023-01-03 1.0

2023-01-04 1.0

2023-01-05 1.0

temperature__has_duplicate_max temperature__has_duplicate_min \

1 2023-01-01 0.0 0.0

2023-01-02 0.0 0.0

2023-01-03 0.0 0.0

2023-01-04 0.0 0.0

2023-01-05 0.0 0.0

temperature__has_duplicate temperature__sum_values \

1 2023-01-01 0.0 28.779975

2023-01-02 0.0 51.286156

2023-01-03 0.0 76.864764

2023-01-04 0.0 99.832629

2023-01-05 0.0 129.710538

temperature__abs_energy temperature__mean_abs_change \

1 2023-01-01 828.286963 NaN

2023-01-02 1334.815163 6.273794

2023-01-03 1989.080313 4.673110

2023-01-04 2516.603136 3.985654

2023-01-05 3409.292625 4.716752

temperature__mean_change \

1 2023-01-01 NaN

2023-01-02 -6.273794

2023-01-03 -1.600684

2023-01-04 -1.937370

2023-01-05 0.274484

temperature__mean_second_derivative_central \

1 2023-01-01 NaN

2023-01-02 NaN

2023-01-03 4.673110

2023-01-04 0.915763

2023-01-05 2.197306

temperature__median ... temperature__fourier_entropy__bins_5 \

1 2023-01-01 28.779975 ... NaN

2023-01-02 25.643078 ... -0.000000

2023-01-03 25.578607 ... 0.693147

2023-01-04 24.273236 ... 1.098612

2023-01-05 25.578607 ... 1.098612

temperature__fourier_entropy__bins_10 \

1 2023-01-01 NaN

2023-01-02 -0.000000

2023-01-03 0.693147

2023-01-04 1.098612

2023-01-05 1.098612

temperature__fourier_entropy__bins_100 \

1 2023-01-01 NaN

2023-01-02 -0.000000

2023-01-03 0.693147

2023-01-04 1.098612

2023-01-05 1.098612

temperature__permutation_entropy__dimension_3__tau_1 \

1 2023-01-01 NaN

2023-01-02 NaN

2023-01-03 -0.000000

2023-01-04 0.693147

2023-01-05 1.098612

temperature__permutation_entropy__dimension_4__tau_1 \

1 2023-01-01 NaN

2023-01-02 NaN

2023-01-03 NaN

2023-01-04 -0.000000

2023-01-05 0.693147

temperature__permutation_entropy__dimension_5__tau_1 \

1 2023-01-01 NaN

2023-01-02 NaN

2023-01-03 NaN

2023-01-04 NaN

2023-01-05 -0.0

temperature__permutation_entropy__dimension_6__tau_1 \

1 2023-01-01 NaN

2023-01-02 NaN

2023-01-03 NaN

2023-01-04 NaN

2023-01-05 NaN

temperature__permutation_entropy__dimension_7__tau_1 \

1 2023-01-01 NaN

2023-01-02 NaN

2023-01-03 NaN

2023-01-04 NaN

2023-01-05 NaN

temperature__query_similarity_count__query_None__threshold_0.0 \

1 2023-01-01 NaN

2023-01-02 NaN

2023-01-03 NaN

2023-01-04 NaN

2023-01-05 NaN

temperature__mean_n_absolute_max__number_of_maxima_7

1 2023-01-01 NaN

2023-01-02 NaN

2023-01-03 NaN

2023-01-04 NaN

2023-01-05 NaN

[5 rows x 783 columns]

This step generates useful features from the chunked data.

We’ll visualize some of the extracted features to see the results.

Python

# Convert the index of the features DataFrame to a simple numeric type

features.index = range(len(features))

# Plot one of the extracted features (e.g., mean temperature)

plt.figure(figsize=(10, 5))

plt.plot(features.index, features['temperature__mean'], marker='o')

plt.title('Extracted Feature: Mean Temperature')

plt.xlabel('Chunk')

plt.ylabel('Mean Temperature (°C)')

plt.grid(True)

plt.show()

Output:

.png)

Extracted Features

This visualization shows how the mean temperature varies across the chunks.

Optimizing Chunk Size

The optimal chunk size depends on the specific use case and the computational resources available. Here are some guidelines:

- Small Chunk Size: Can lead to frequent context switching and overhead, reducing performance.

- Large Chunk Size: Can cause memory issues if the chunks are too large to fit into memory.

Experimenting with different chunk sizes and profiling the performance can help find the optimal setting.

Best Practices for Chunk Size Optimization

- Start with the Default Chunk Size: Begin with the default chunk size and adjust it based on your specific use case.

- Experiment with Different Chunk Sizes: Try different chunk sizes to find the optimal value for your dataset.

- Monitor Processing Times: Measure the processing times for different chunk sizes to determine the most efficient setting.

- Avoid Over-Provisioning: Ensure that the chunk size is not too large, leading to over-provisioning and inefficient resource utilization.

Conclusion

Setting the appropriate chunk size in tsfresh is crucial for optimizing processing speed and resource utilization. By understanding the impact of chunk size and leveraging parallelization, you can significantly enhance the performance of feature extraction tasks. Experiment with different settings and monitor the performance to find the optimal configuration for your specific use case.

FAQs

What if my data is not in a time series format?

Time series data is the primary use case for tsfresh. Make sure the data you have is organized with a time component.

How can I pick the appropriate chunk size?

The capacity of your computer, and the information it contains define the chunk size. Initially, choose a moderate size and then adjust it based on performance.

Can I utilize features other than mean temperature?

In fact, among other things, tsfresh gets the standard deviation, maximum, and minimum.

Please Login to comment...